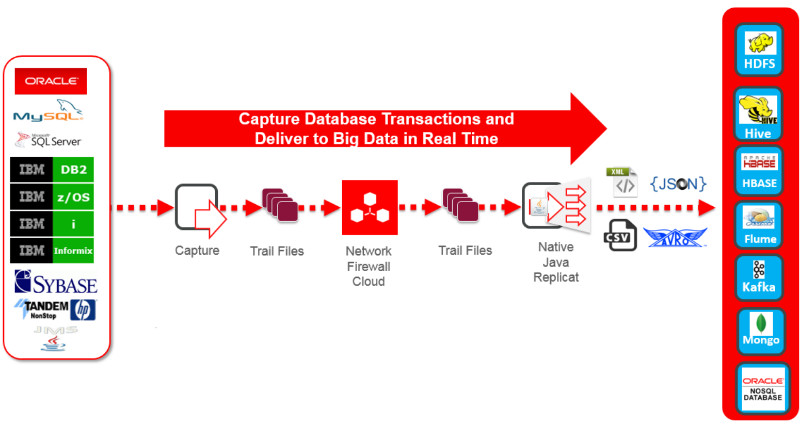

Oracle GoldenGate for Big Data 12c streams transactional data into big data systems in real time, without impacting the performance of source systems. It streamlines real-time data delivery into the most popular big data solutions, including Apache Hadoop, Apache HBase, Apache Hive, Apache Flume and Apache Kafka, to facilitate improved insight and timely action.

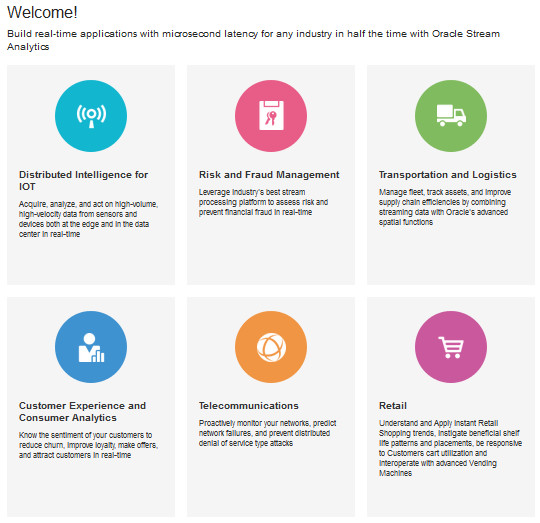

The Oracle Stream Analytics (formerly Oracle Stream Explorer) platform provides a compelling combination of an easy-to-use visual façade for rapidly creating and modifying real-time event stream processing applications, advanced streaming analytical capabilities, and a comprehensive, flexible and diverse runtime platform to manage and execute these solutions.

I’m writing this blog to show you how Oracle GoldenGate for Big Data can integrate seamlessly with Oracle Stream Analytics in order to create real-time customized explorations and make use of pre-defined analytical patterns to understand your data stream the second it happens. In the following demo, I have used Oracle Big Data Lite VM (4.4), as it has the necessary Apache frameworks, Oracle Database and Oracle GoldenGate installations, which I’ll be using throughout the demo.

On the same VM, I’ve installed and configured Oracle Stream Analytics (12.2.1). The installation and configuration are quite simple, and the steps can be found here. Also, rather than the version already installed on the VM, I’ve used Oracle GoldenGate for Big Data (12.2.0.1.3) to stream transactional data into Kafka (0.9.0).

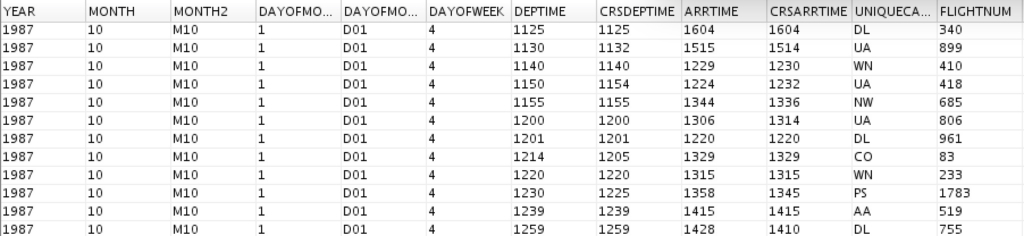

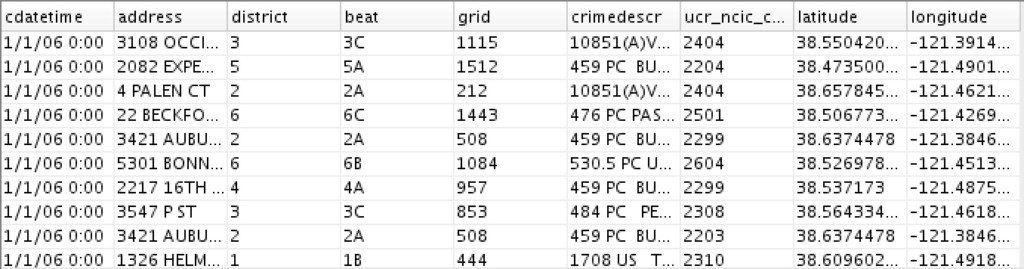

For this demo, I’m going to use two sample datasets: flight schedules and the Sacramento crime records (made available by the Sacramento Police Department). I’ll stream those datasets into Oracle Stream Analytics, create some pattern explorations, and plot precise geolocations to help me answer some questions and explore the possibilities.

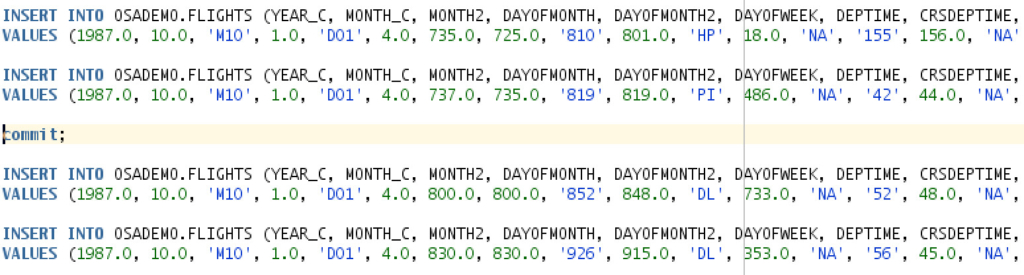

Here is a sample output for the flight schedules dataset we’ll be using:

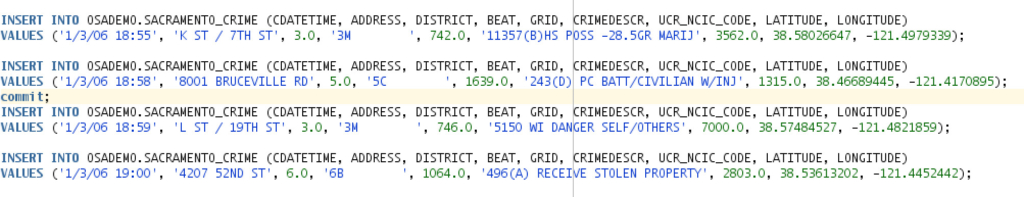

And a sample output for the crime records dataset:

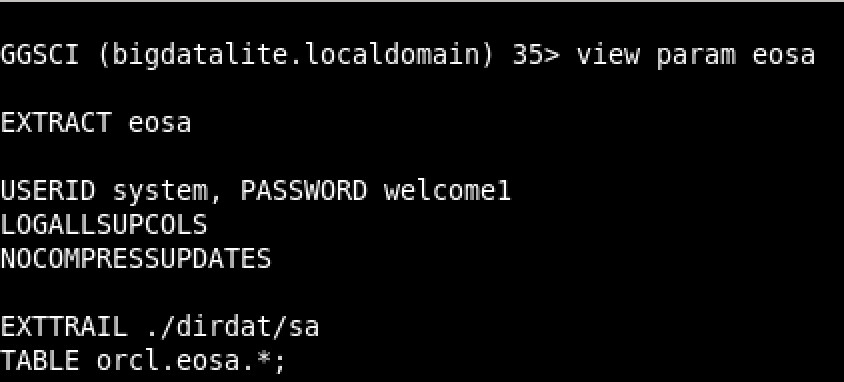

Let’s get into the configuration, starting with Oracle GoldenGate. Oracle GoldenGate will capture transactional data (inserts/updates/deletes) from two tables in Oracle Database, as per the following configuration:

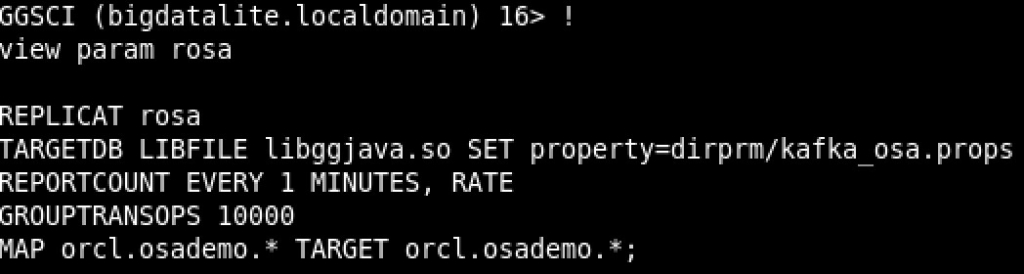

The target, Oracle GoldenGate for Big Data, will produce these captured transactions to a predefined Kafka topic (we’ll come to that later). The Replicat (delivery process) parameter file looks like the following:

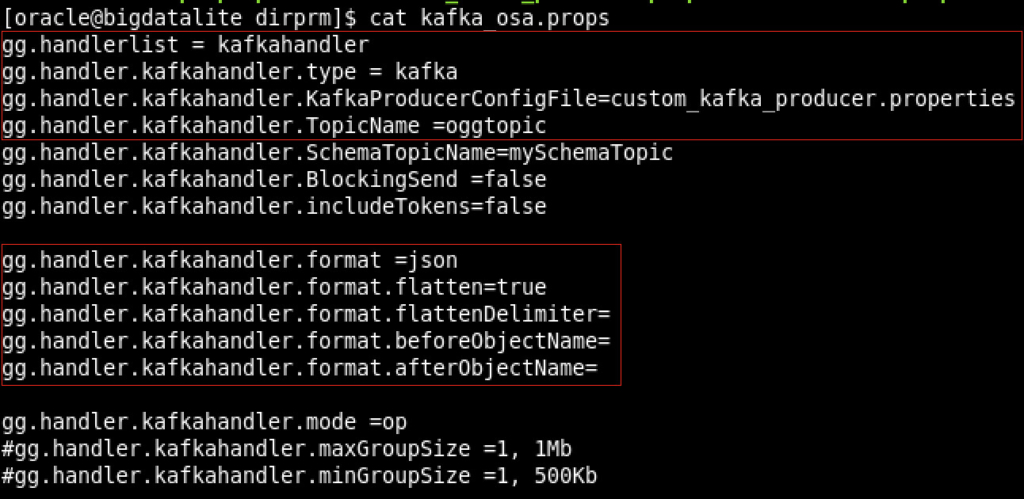

As you may have noticed, the parameter file points to a kafka_osa.props file, which defines how Oracle GoldenGate’s stream producer works, e.g. emitting the messages in JSON format. Here is a snapshot of kafka_osa.props:

Notice the following:

1. I’ve selected “kafkahandler” as the handler.

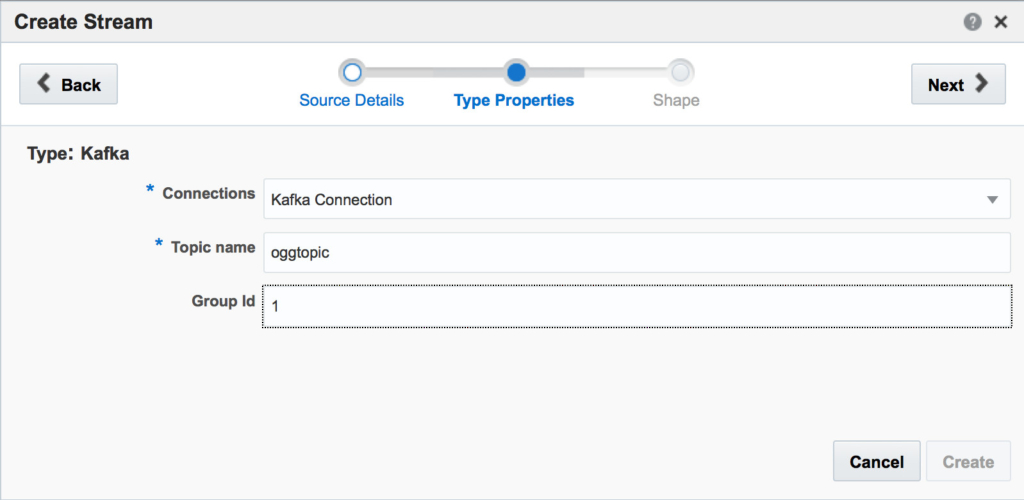

2. The topic Oracle GoldenGate will produce to is called “oggtopic”

3. The configuration is pointing to a producer config file, which we’ll explore in a second.

4. The formatter is set to output “json”, and the output is flattened (so that Oracle Stream Analytics can read it).

5. I’ve cleared any postfix/prefix (for presentation purposes).
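To make item 4 more concrete: in the default (nested) JSON layout, Oracle GoldenGate places a row’s column values inside a nested object (for an insert, an `after` object), next to top-level metadata such as `table` and `op_type`. Flattening lifts those nested values to top-level keys, so every field is a plain scalar that a stream shape can map. The following is a minimal Python sketch of the idea under stated assumptions, not the formatter’s actual implementation: the sample record, its column names and the `after.COLUMN` key naming are hypothetical.

```python
import json
from typing import Any, Dict


def flatten(record: Dict[str, Any], delimiter: str = ".", prefix: str = "") -> Dict[str, Any]:
    """Recursively lift nested objects to top-level keys joined by `delimiter`.

    Illustrative only: the key-naming scheme is an assumption, not the
    exact output of the Oracle GoldenGate JSON formatter.
    """
    flat: Dict[str, Any] = {}
    for key, value in record.items():
        name = f"{prefix}{delimiter}{key}" if prefix else key
        if isinstance(value, dict):
            # Descend into nested objects such as the "after" row image.
            flat.update(flatten(value, delimiter, name))
        else:
            flat[name] = value
    return flat


# Hypothetical insert record; the column names are made up for illustration.
nested = {
    "table": "ORCL.OSADEMO.FLIGHTS",
    "op_type": "I",
    "after": {"CARRIER": "AA", "DEP_TIME": 905, "DISTANCE": 1389},
}

print(json.dumps(flatten(nested), sort_keys=True))
```

Every value in the flattened record is a string or a number, which is what makes a one-field-per-key mapping possible downstream.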

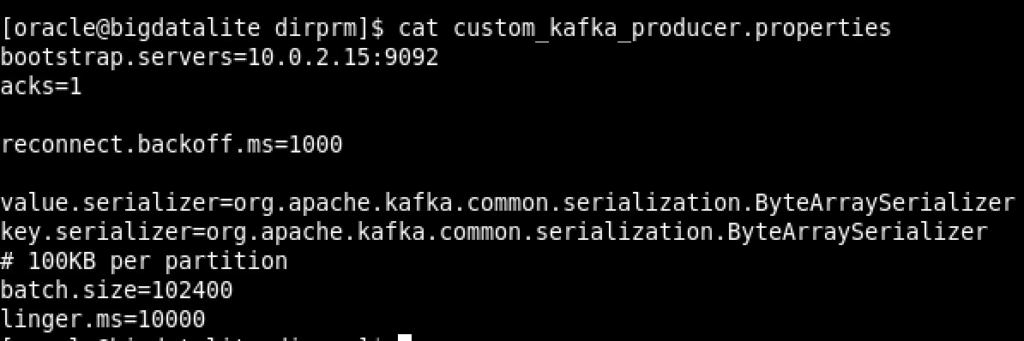

Now let’s have a quick look at custom_kafka_producer.properties:

The Kafka producer points to the Kafka server; in my case it’s on the same VM, and we’ll start it in a second.

** Note that the sample template file provided by Oracle GoldenGate for the Kafka producer has a “compression.type=gzip” property, which I’ve removed, as Oracle Stream Analytics needs an uncompressed stream.
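If you script your environment setup, the change described in the note can be automated. The sketch below parses a producer properties file and drops the `compression.type` line; with it gone, the producer falls back to Kafka’s default `compression.type` of `none`. It is a minimal sketch: the file content is illustrative (standard Kafka producer property names with example values, not necessarily the exact shipped template), and the parser handles only simple `key=value` lines, not the full `java.util.Properties` syntax.

```python
from typing import Dict

# Illustrative producer settings: standard Kafka producer property names,
# example values only.
TEMPLATE = """\
# Kafka producer settings used by the GoldenGate Kafka handler
bootstrap.servers=localhost:9092
acks=1
compression.type=gzip
value.serializer=org.apache.kafka.common.serialization.ByteArraySerializer
key.serializer=org.apache.kafka.common.serialization.ByteArraySerializer
"""


def parse_properties(text: str) -> Dict[str, str]:
    """Minimal key=value parser that skips blank lines and #/! comments.

    Line continuations and escapes from java.util.Properties are not handled.
    """
    props: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#!":
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def strip_compression(text: str) -> str:
    """Remove any compression.type line so messages reach the topic uncompressed."""
    kept = [
        raw for raw in text.splitlines()
        if raw.strip().partition("=")[0].strip() != "compression.type"
    ]
    return "\n".join(kept) + "\n"


cleaned = strip_compression(TEMPLATE)
print(parse_properties(cleaned)["bootstrap.servers"])
```

Editing the text rather than re-serializing the parsed dictionary keeps the file’s comments and ordering intact.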

Next, let’s start the Kafka server:

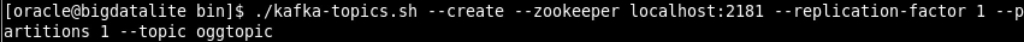

Since Oracle GoldenGate for Big Data produces JSON messages to a Kafka topic, that topic needs to be created first:

I’ve chosen the name “oggtopic”; you’re free to choose any other name you want. Now that the capture process, Replicat (delivery) and Kafka processes are up and running, it’s time to jump into Oracle Stream Analytics.
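For reference, topic creation with Kafka 0.9’s command-line tool goes through ZooKeeper (the `--zookeeper` option). The Python sketch below only assembles and prints that command. The Kafka install path is a placeholder, the ZooKeeper address `localhost:2181` (the port in Kafka’s sample ZooKeeper config) is an assumption for a single-node VM, and a single partition with replication factor 1 suits a demo rather than production.

```python
import shlex
from typing import List


def create_topic_cmd(topic: str,
                     zookeeper: str = "localhost:2181",
                     partitions: int = 1,
                     replication_factor: int = 1,
                     kafka_home: str = "/path/to/kafka") -> List[str]:
    """Build a kafka-topics.sh --create command using Kafka 0.9 syntax.

    `kafka_home` is a placeholder; point it at your own Kafka installation.
    """
    return [
        f"{kafka_home}/bin/kafka-topics.sh", "--create",
        "--zookeeper", zookeeper,
        "--replication-factor", str(replication_factor),
        "--partitions", str(partitions),
        "--topic", topic,
    ]


if __name__ == "__main__":
    cmd = create_topic_cmd("oggtopic")
    # Print a copy-pasteable shell command; to execute it instead,
    # pass `cmd` to subprocess.run(cmd, check=True).
    print(shlex.join(cmd))
```

Building the command as a list (rather than one string) avoids shell-quoting problems if it is later handed to `subprocess.run`.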

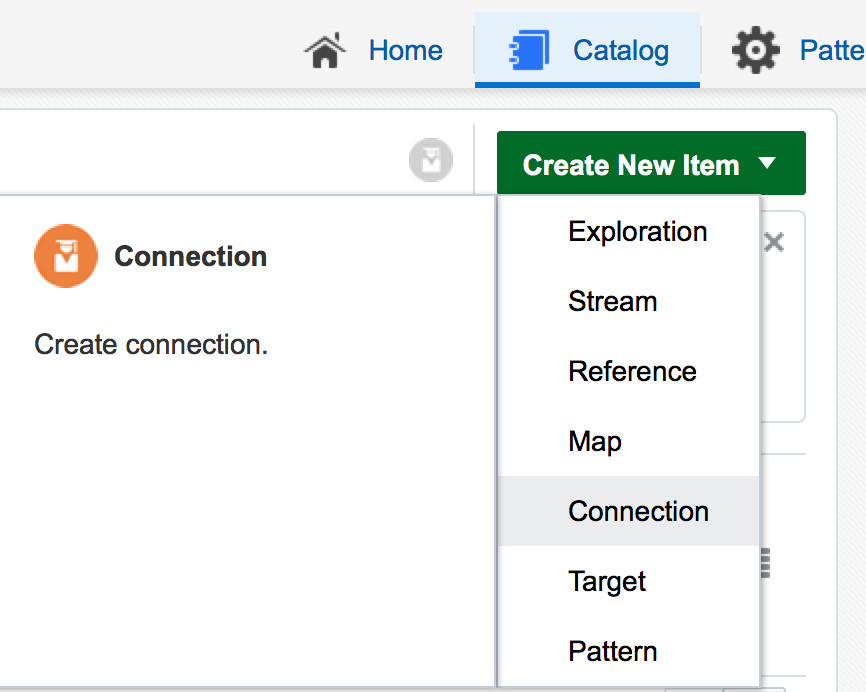

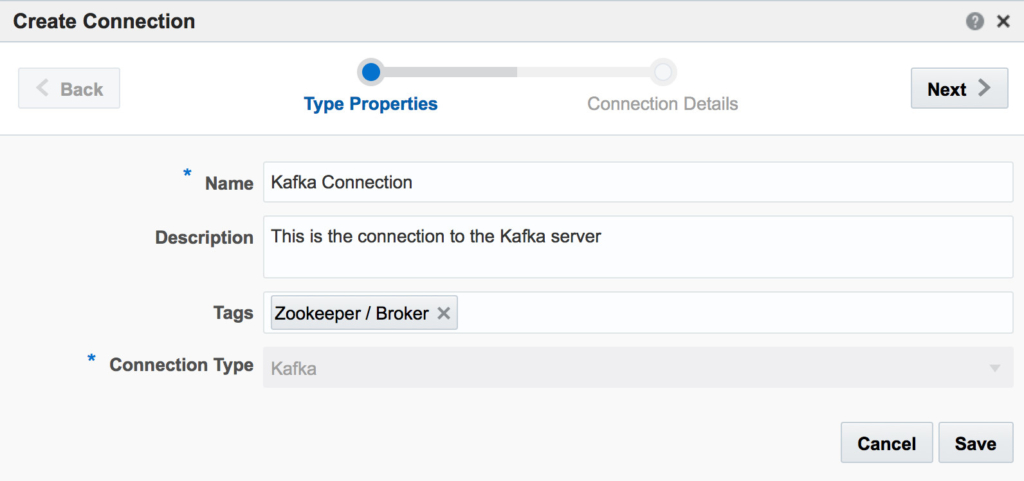

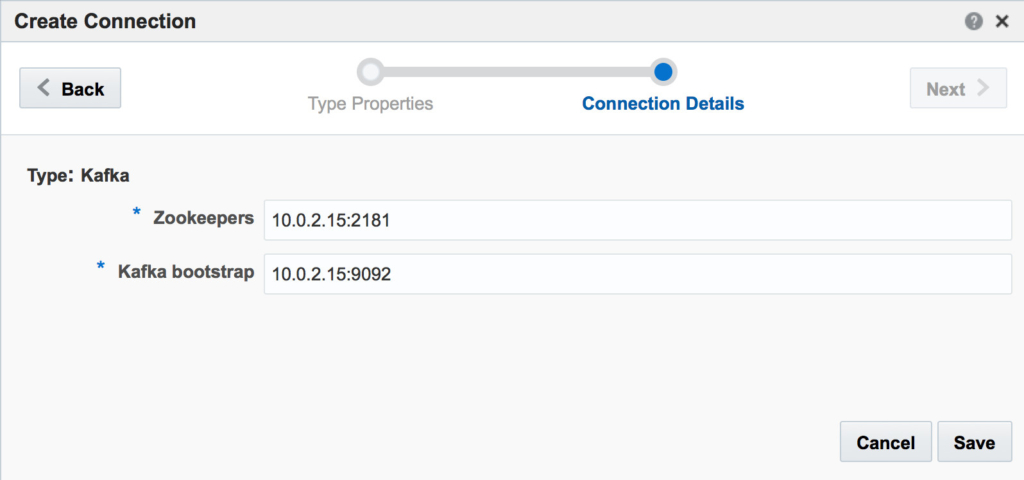

First, create a connection to the Kafka server:

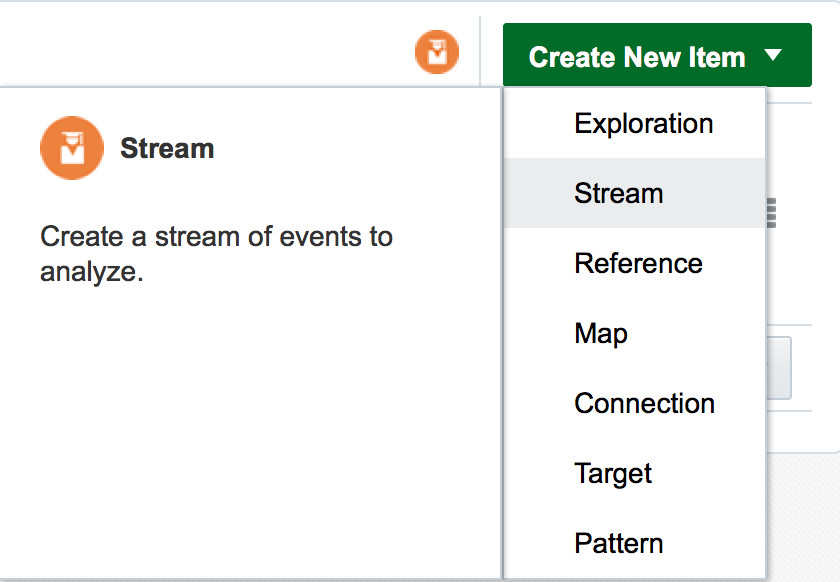

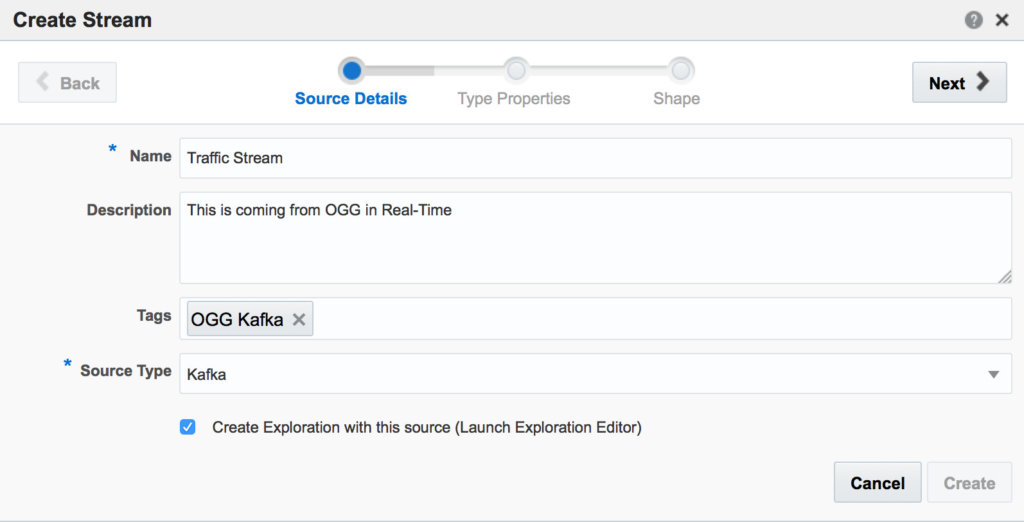

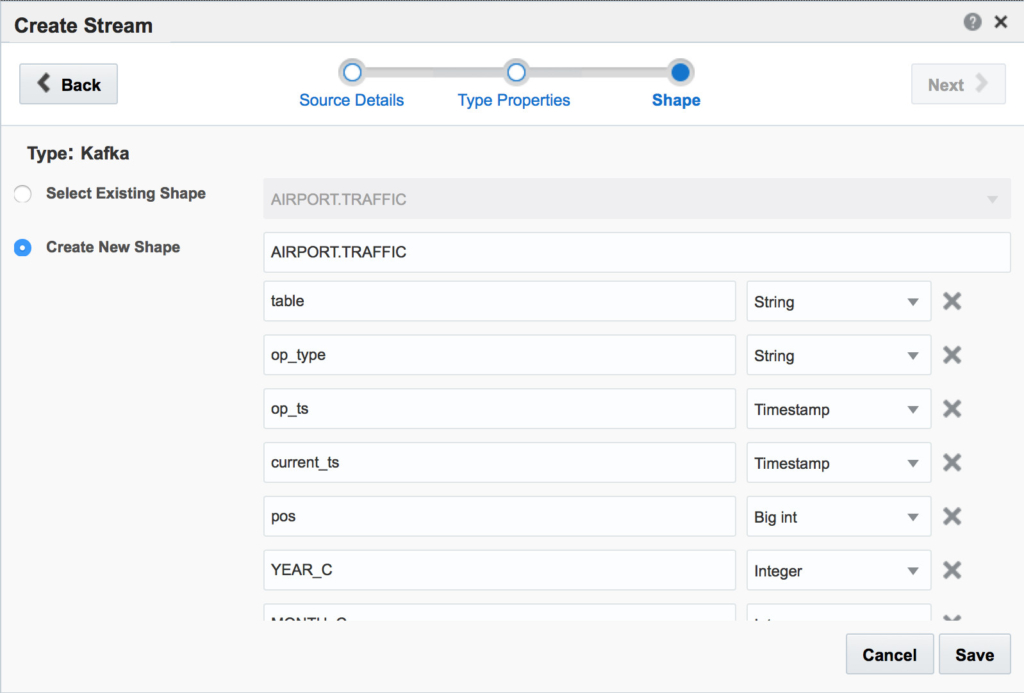

Next, we need to create a stream, which is basically a consumer for the Kafka topic (oggtopic) that we created earlier:

** The shape describes what the JSON message looks like when it is picked up from the Kafka topic.

You may create other/more streams depending on what your data looks like. For the sake of this blog, I’ve created another stream called “Crime Stream” (using exactly the same preceding steps), which we’ll use later on.

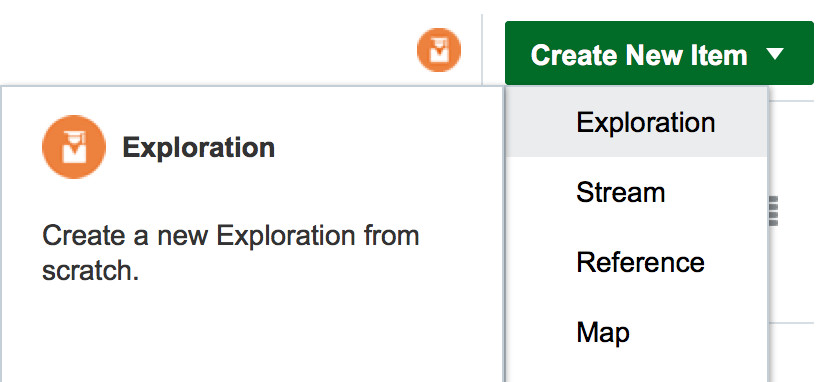

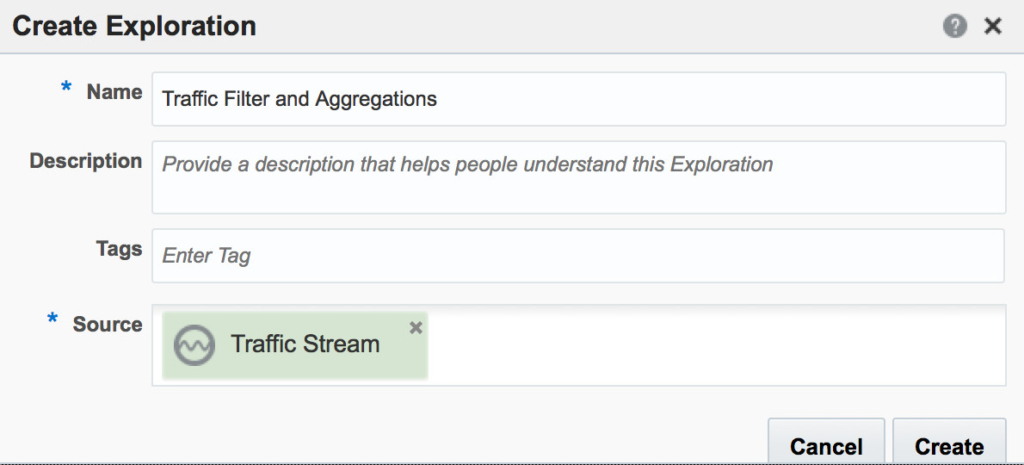

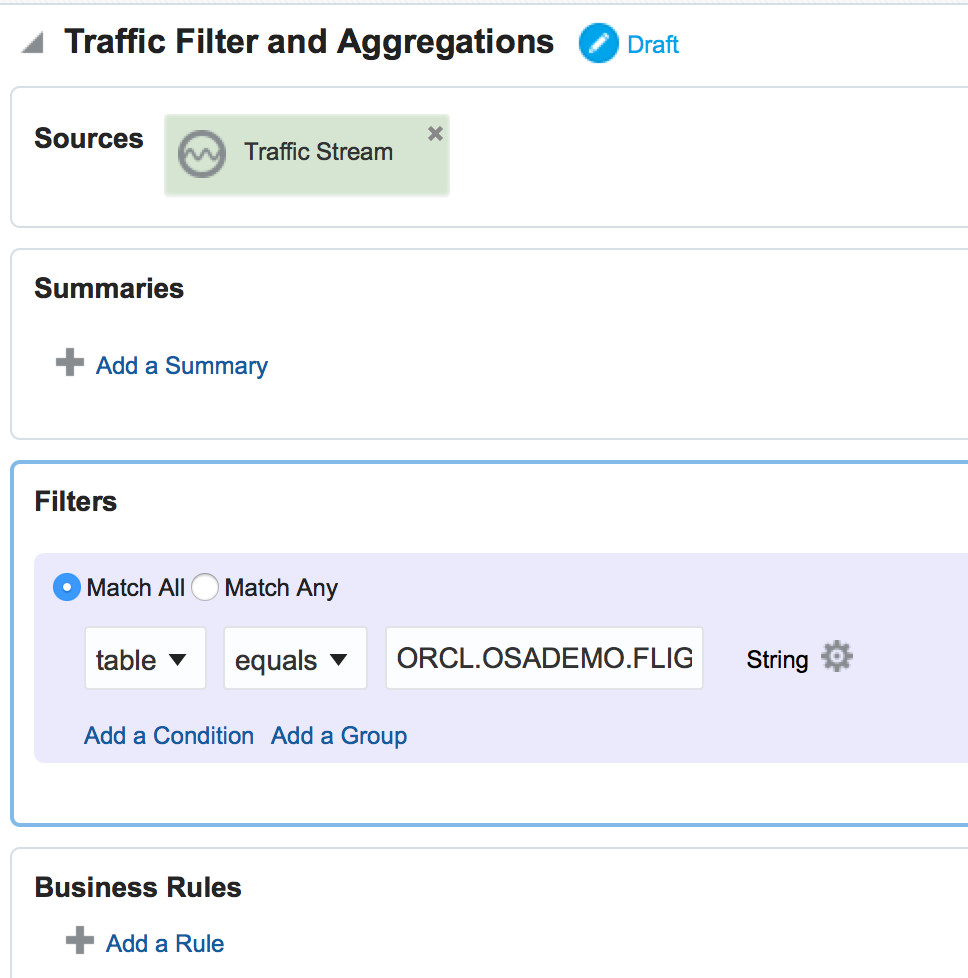

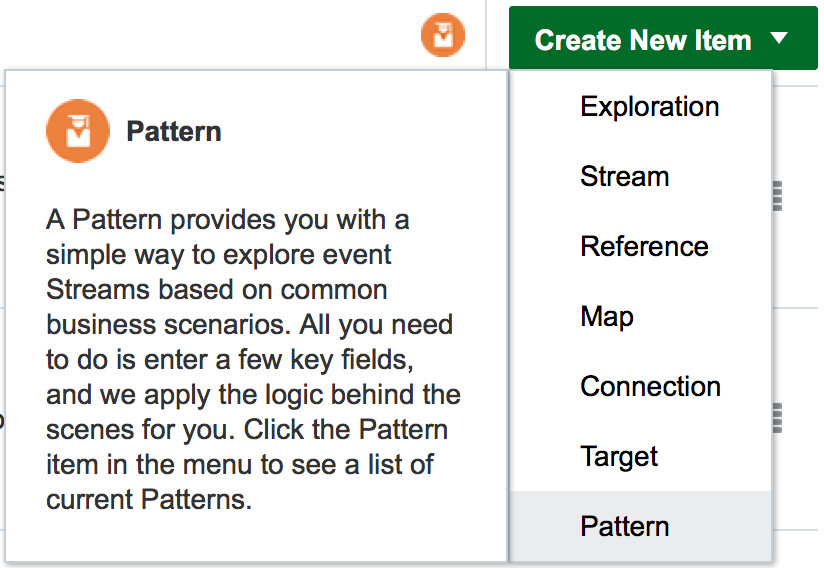

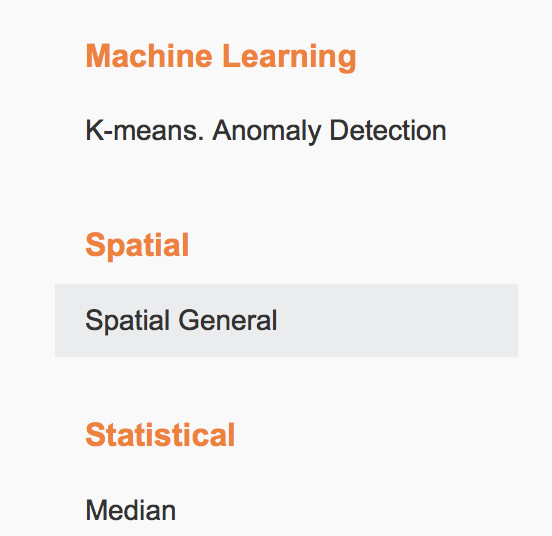
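One way to picture a shape is as a mapping from field names to types, which can be derived from a single sample message. The sketch below does that for a flattened message and also shows why flattening matters: a nested object has no single scalar type, so it ends up as `unknown`. The type labels and the sample message are illustrative assumptions, not the labels or fields Oracle Stream Analytics actually uses.

```python
import json
from typing import Dict

# Ordered (Python type, label) pairs. bool must come before int because
# bool is a subclass of int in Python. Labels are illustrative only.
_TYPE_NAMES = [(bool, "boolean"), (int, "integer"), (float, "double"), (str, "string")]


def infer_shape(message: str) -> Dict[str, str]:
    """Map each top-level field of a JSON message to a simple type label."""
    record = json.loads(message)
    shape: Dict[str, str] = {}
    for field, value in record.items():
        for py_type, label in _TYPE_NAMES:
            if isinstance(value, py_type):
                shape[field] = label
                break
        else:
            shape[field] = "unknown"  # e.g. null or a nested object
    return shape


sample = '{"table": "ORCL.OSADEMO.FLIGHTS", "op_type": "I", "after.DEP_TIME": 905, "after.DISTANCE": 1389.0}'
print(infer_shape(sample))
```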

Oracle Stream Analytics provides tremendous filtering and aggregation capabilities. One can create an “Exploration” and customize it to be used by any of the many “Patterns” available. Let’s create an exploration that filters our “Traffic Stream”:

The filter I’ve added is very simple: this exploration shows only transactional data coming from the table “ORCL.OSADEMO.FLIGHTS”. For the purposes of this blog, I’ve also created another exploration, using the same steps, that filters a different table so that it can be used with a specific pattern.

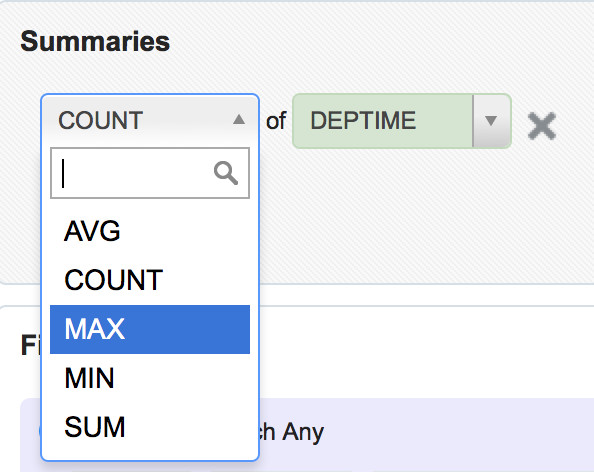

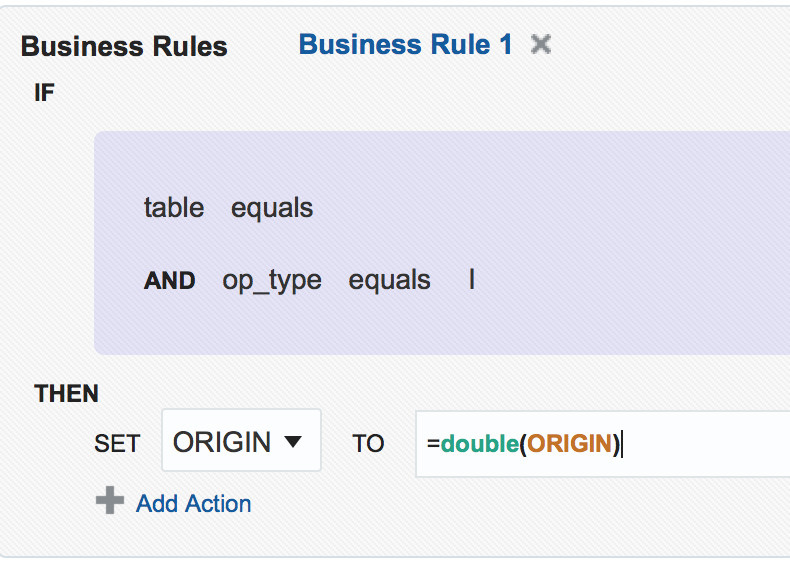
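Conceptually, the filter is a predicate applied to every event on the stream. A minimal Python equivalent keeps only events whose `table` field equals ORCL.OSADEMO.FLIGHTS. The event dictionaries are hypothetical flattened messages, and the crime table name in the sample is made up, since the post doesn’t name it.

```python
from typing import Any, Dict, Iterable, Iterator

Event = Dict[str, Any]


def only_table(events: Iterable[Event], table: str) -> Iterator[Event]:
    """Yield only the events that were captured from `table`."""
    for event in events:
        if event.get("table") == table:
            yield event


stream = [
    {"table": "ORCL.OSADEMO.FLIGHTS", "op_type": "I", "after.DISTANCE": 1389},
    # Hypothetical crime table name; the post does not give the real one.
    {"table": "ORCL.OSADEMO.CRIMES", "op_type": "I", "after.DISTRICT": "3"},
]
flights = list(only_table(stream, "ORCL.OSADEMO.FLIGHTS"))
print(len(flights))  # 1
```

Using a generator mirrors the streaming setting: each event is checked as it arrives, and nothing is buffered.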

In explorations, you can also add business rules and summaries, and aggregate your values:

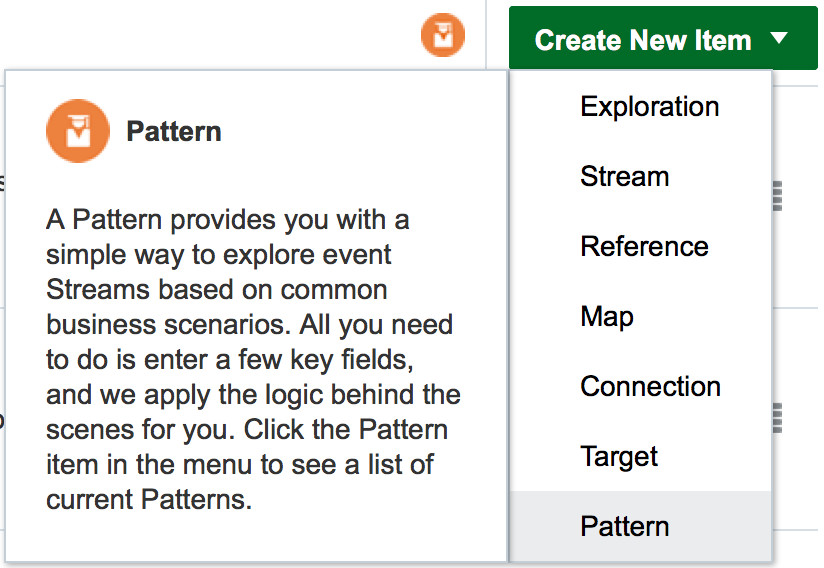

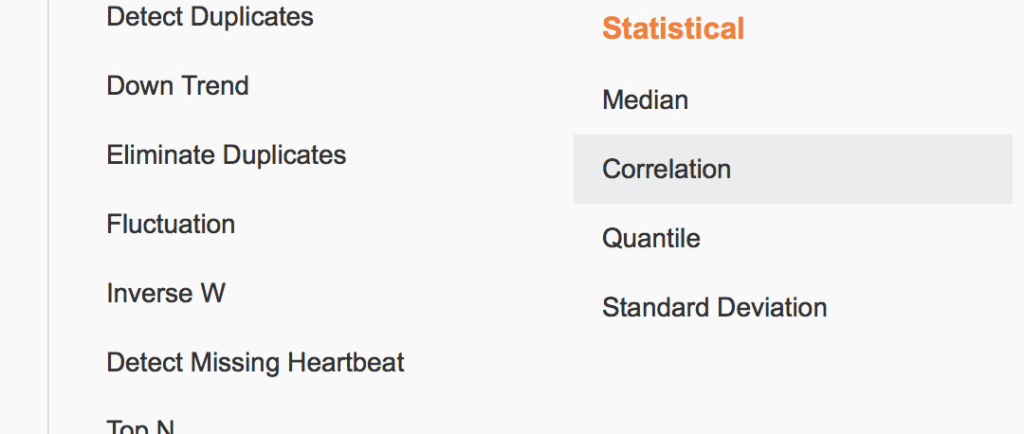

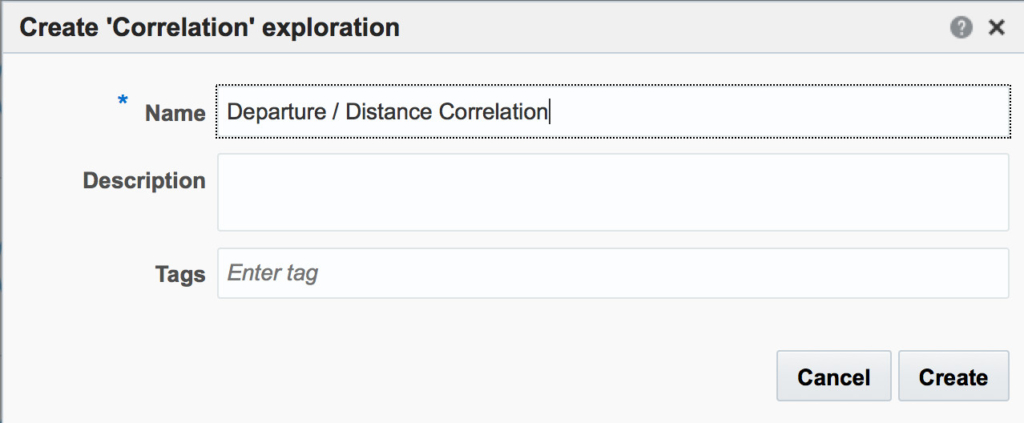

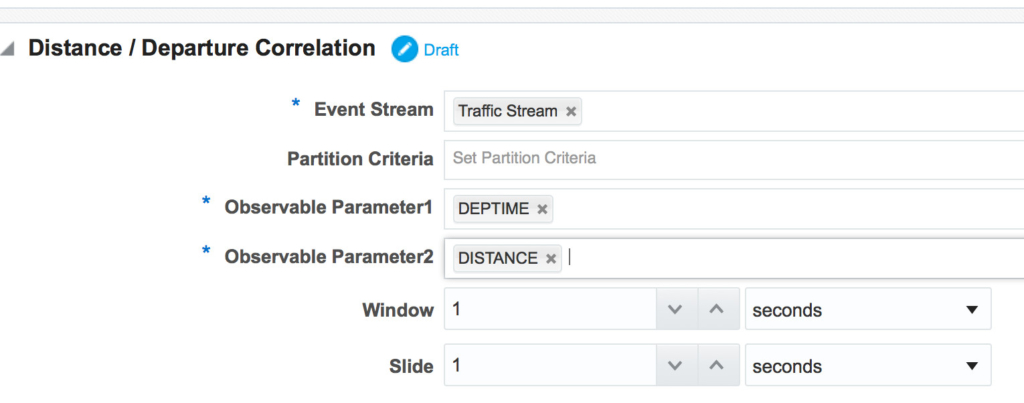

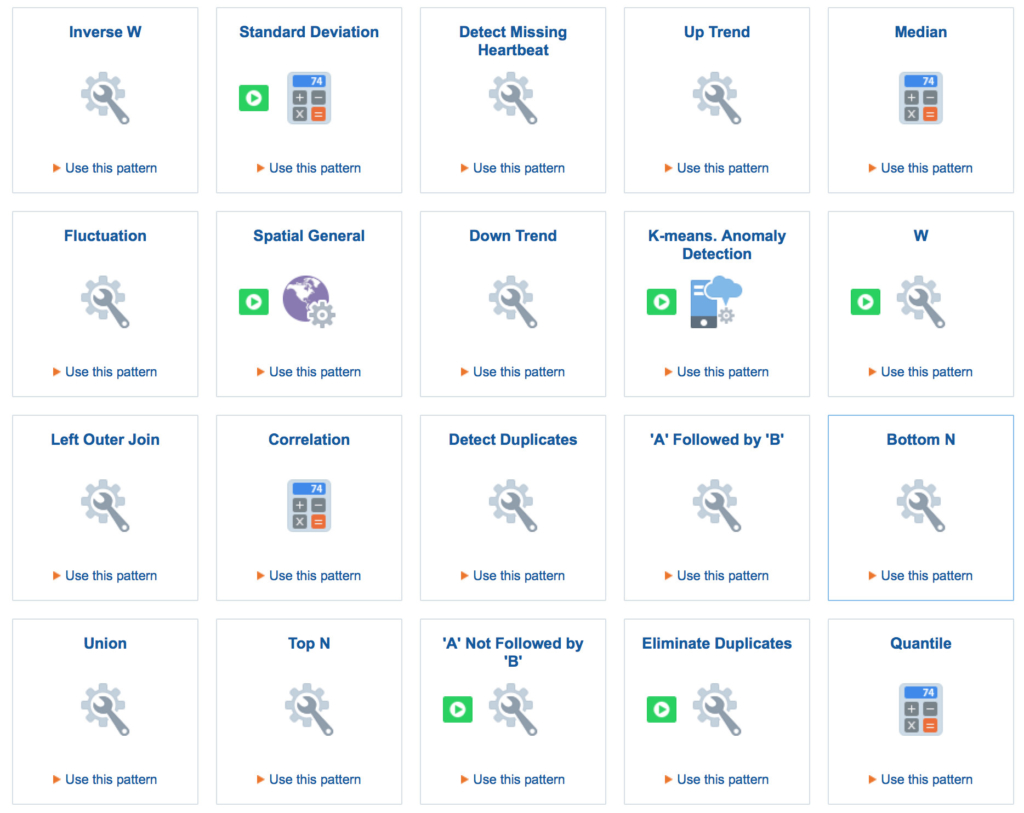
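As a rough analogue of a summary with a group-by, the sketch below computes a count and an average per group. The field names (`after.CARRIER`, `after.DISTANCE`) are hypothetical, and while Oracle Stream Analytics updates such values continuously as events arrive, this sketch works over a finite list.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, Tuple


def summarize(events: Iterable[Dict[str, Any]],
              group_field: str,
              value_field: str) -> Dict[Any, Tuple[int, float]]:
    """Return {group value: (event count, average of value_field)}."""
    counts: Dict[Any, int] = defaultdict(int)
    totals: Dict[Any, float] = defaultdict(float)
    for event in events:
        key = event[group_field]
        counts[key] += 1
        totals[key] += float(event[value_field])
    return {key: (counts[key], totals[key] / counts[key]) for key in counts}


flights = [
    {"after.CARRIER": "AA", "after.DISTANCE": 1000},
    {"after.CARRIER": "AA", "after.DISTANCE": 2000},
    {"after.CARRIER": "UA", "after.DISTANCE": 500},
]
print(summarize(flights, "after.CARRIER", "after.DISTANCE"))
# {'AA': (2, 1500.0), 'UA': (1, 500.0)}
```

Keeping running counts and totals (instead of storing every value) is what lets such an aggregate be updated one event at a time.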

Now that the streams are configured and ready, we can create an empty exploration and customize it, or choose one of the many out-of-the-box pattern explorations to analyse the stream. Let’s use the “Correlation” pattern exploration to understand whether there is a relationship between the departure time and distance values in the flight schedules dataset:

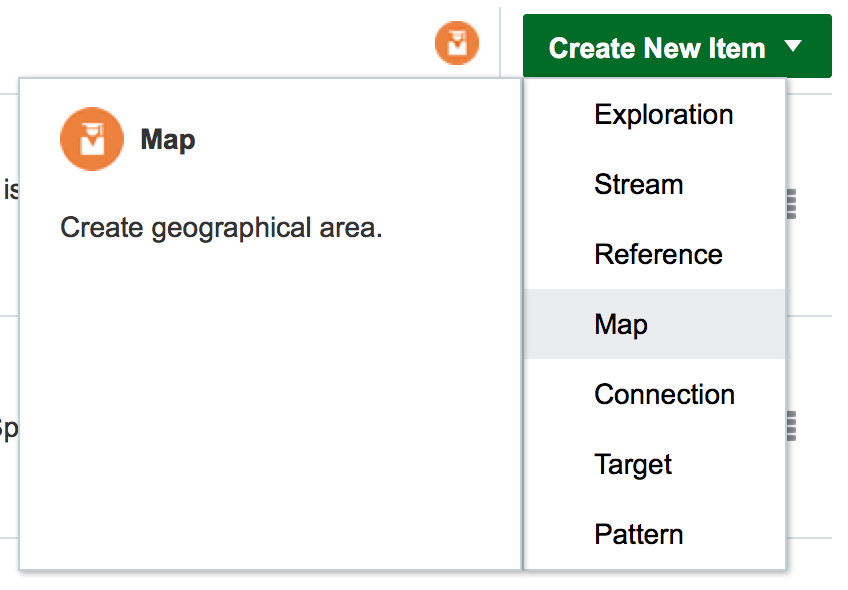

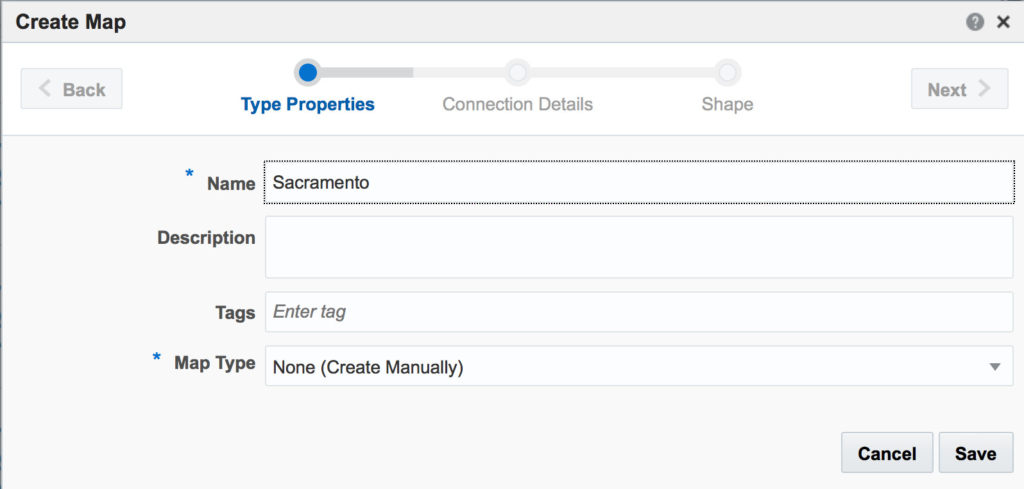

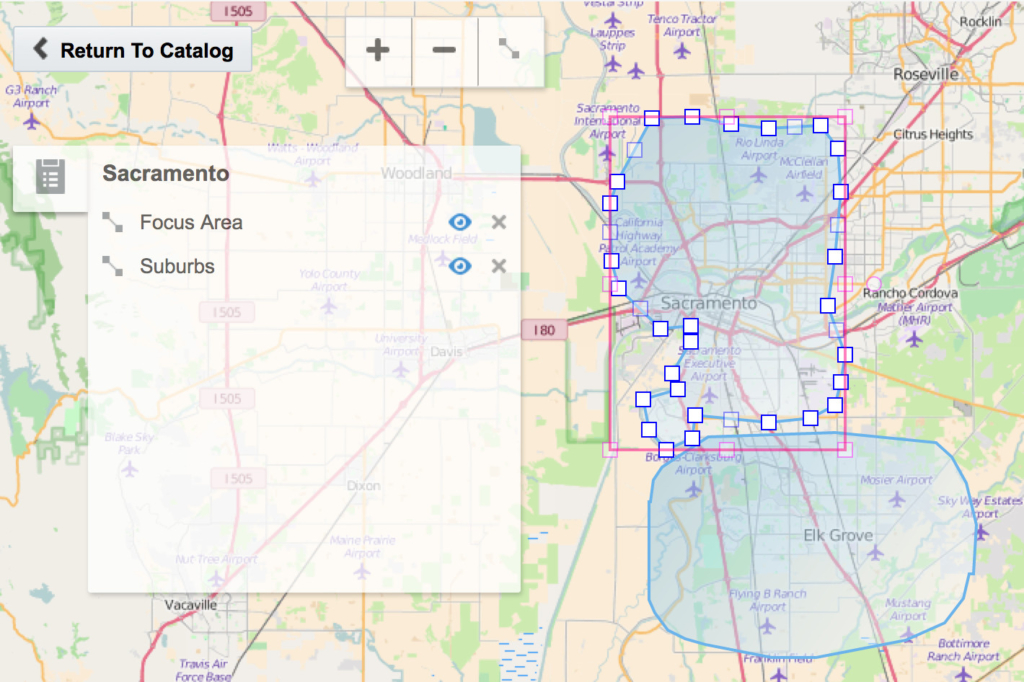

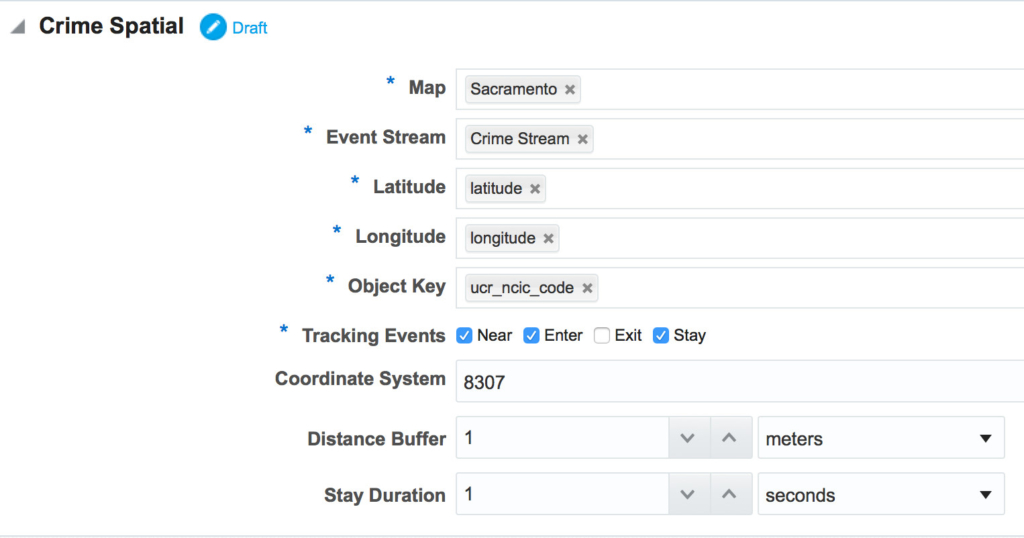
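The post doesn’t say which statistic the Correlation pattern computes internally. As a point of reference only, the sketch below computes the Pearson correlation coefficient r, a common measure of linear association that ranges from -1 to 1, between departure times and distances. Treating the pattern as Pearson is an assumption, and the sample numbers are made up.

```python
import math
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient r, in the range [-1, 1]."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / math.sqrt(var_x * var_y)


# Made-up sample: departure times as HHMM integers, distances in miles.
dep_times = [600, 745, 900, 1130, 1415, 1700]
distances = [300, 520, 610, 980, 1200, 1850]
print(f"r = {pearson(dep_times, distances):.3f}")
```

A value of r near 0 means little linear relationship, and values near ±1 mean a strong one; either way, r measures association, not cause and effect.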

Now let’s use another pattern and create a new “Spatial Pattern” exploration to plot and analyse the crime records dataset for Sacramento. First, we need to create a map (a fun exercise that takes a couple of minutes):

Finally, let’s create and configure the spatial pattern, and have it use the preceding map:

For detailed steps on how to use this pattern and others, you may refer to the Oracle Learning Library here and search for “Oracle Stream Analytics”.
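Whatever the map configuration, a spatial plot can only use events that carry a usable latitude/longitude pair. The sketch below shows that preparation step in plain Python: parse both coordinates, and drop records with missing or out-of-range values. The `after.LATITUDE`/`after.LONGITUDE` field names and the sample values are hypothetical; how Oracle Stream Analytics itself binds fields to the map is configured in its UI and not reproduced here.

```python
from typing import Any, Dict, Iterable, List, Optional, Tuple

Point = Tuple[float, float]


def to_point(event: Dict[str, Any], lat_field: str, lon_field: str) -> Optional[Point]:
    """Return (lat, lon) if both fields parse and lie in valid ranges, else None."""
    try:
        lat = float(event[lat_field])
        lon = float(event[lon_field])
    except (KeyError, TypeError, ValueError):
        return None
    if -90.0 <= lat <= 90.0 and -180.0 <= lon <= 180.0:
        return lat, lon
    return None


def plottable(events: Iterable[Dict[str, Any]], lat_field: str, lon_field: str) -> List[Point]:
    """Keep only the events that yield a valid coordinate pair."""
    points: List[Point] = []
    for event in events:
        point = to_point(event, lat_field, lon_field)
        if point is not None:
            points.append(point)
    return points


# Hypothetical flattened crime events (values are strings, as in a CSV load).
crimes = [
    {"after.LATITUDE": "38.5504", "after.LONGITUDE": "-121.3914"},
    {"after.LATITUDE": None, "after.LONGITUDE": "-121.49"},     # missing value
    {"after.LATITUDE": "138.5", "after.LONGITUDE": "-121.49"},  # out of range
]
print(plottable(crimes, "after.LATITUDE", "after.LONGITUDE"))
# [(38.5504, -121.3914)]
```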

Now that everything is ready, all we need is to simulate transactional activity (inserts/updates/deletes) in our source Oracle Database. For that, I’ve created two scripts that insert thousands of records into the two tables Oracle GoldenGate is capturing from:

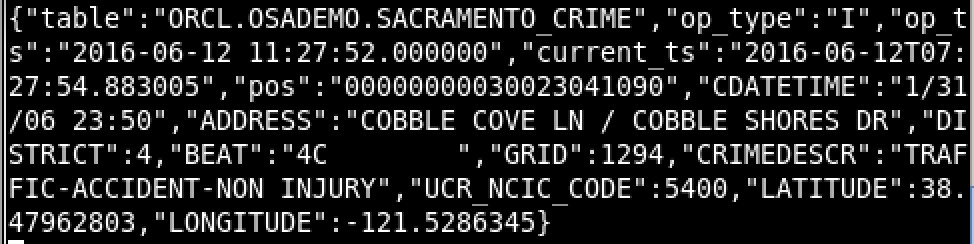
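The original scripts are shown only as a screenshot. As a hedged alternative for generating a similar workload, the Python sketch below builds thousands of synthetic flight rows plus an `INSERT` statement with `:1`-style positional binds, the style Oracle’s Python drivers accept. The column list, the HHMM encoding of departure times and the value ranges are all hypothetical; the actual database call is left as a comment because the driver and connection are not part of the post.

```python
import random
from typing import List, Tuple

# Hypothetical column layout: the post does not show the FLIGHTS table DDL.
INSERT_SQL = ("INSERT INTO OSADEMO.FLIGHTS (CARRIER, DEP_TIME, DISTANCE) "
              "VALUES (:1, :2, :3)")

Row = Tuple[str, int, int]


def fake_flights(n: int, seed: int = 42) -> List[Row]:
    """Generate n synthetic flight rows; DEP_TIME is encoded as HHMM."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    carriers = ["AA", "DL", "UA", "WN"]
    rows: List[Row] = []
    for _ in range(n):
        hhmm = rng.randrange(24) * 100 + rng.randrange(60)
        rows.append((rng.choice(carriers), hhmm, rng.randint(100, 2500)))
    return rows


rows = fake_flights(5000)
print(len(rows), rows[0])
# With a DB-API 2.0 Oracle connection `conn` (driver not shown here):
#     cur = conn.cursor()
#     cur.executemany(INSERT_SQL, rows)
#     conn.commit()
```

Oracle GoldenGate captures committed transactions, so how often the script commits determines how the inserts are grouped into transactions on the way to Kafka.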

Running the scripts generates thousands of transactions, which Oracle GoldenGate captures and produces as fast data into the Kafka topic (oggtopic). Here is a sample output of the produced flight schedule messages:

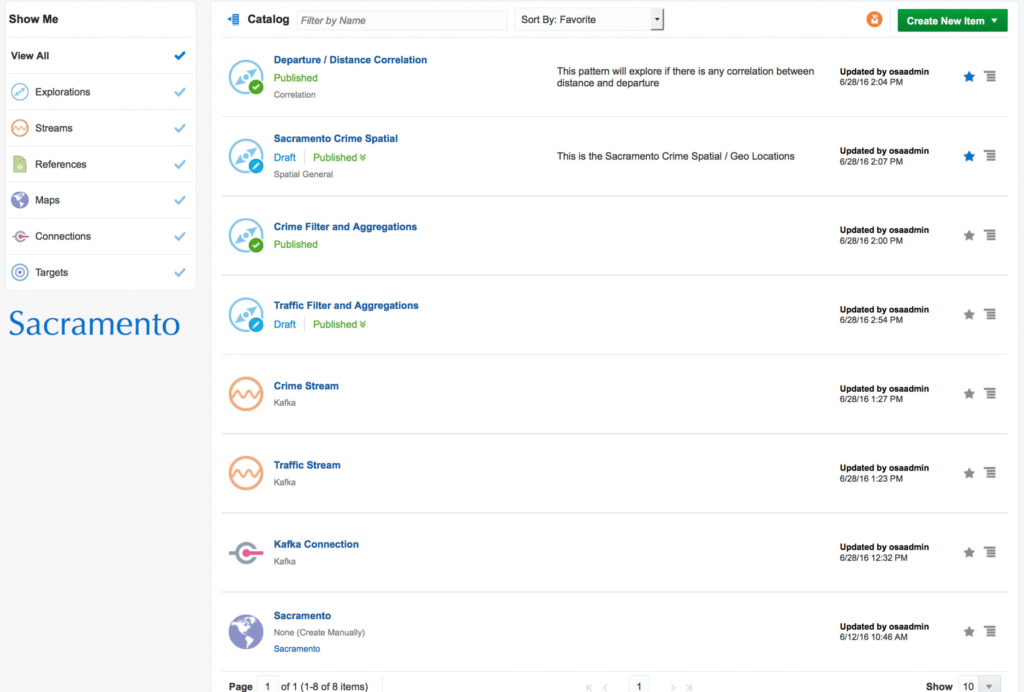

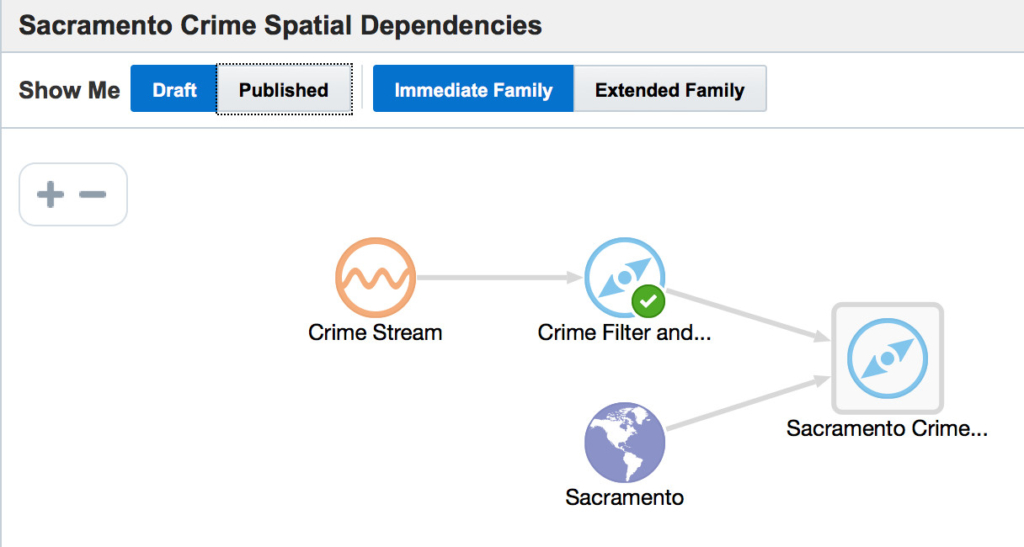

As you can see, the JSON is flattened and ready to be used by Oracle Stream Analytics. Here is a look at what has been created so far:

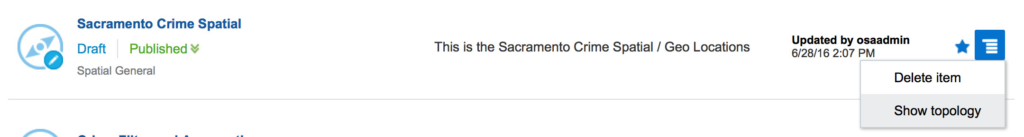

Let’s see what the “Sacramento Crime Spatial” topology/flow looks like, along with its dependencies:

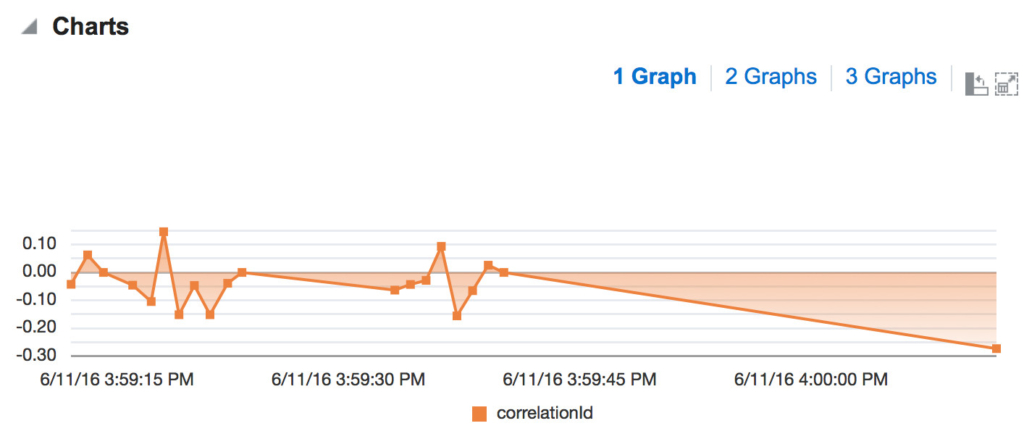

Time to check the results and explore our streams. Let’s check the “Distance / Departure Correlation”:

The result is quite easy to understand: it shows that, on some occasions, distance is correlated with departure time.

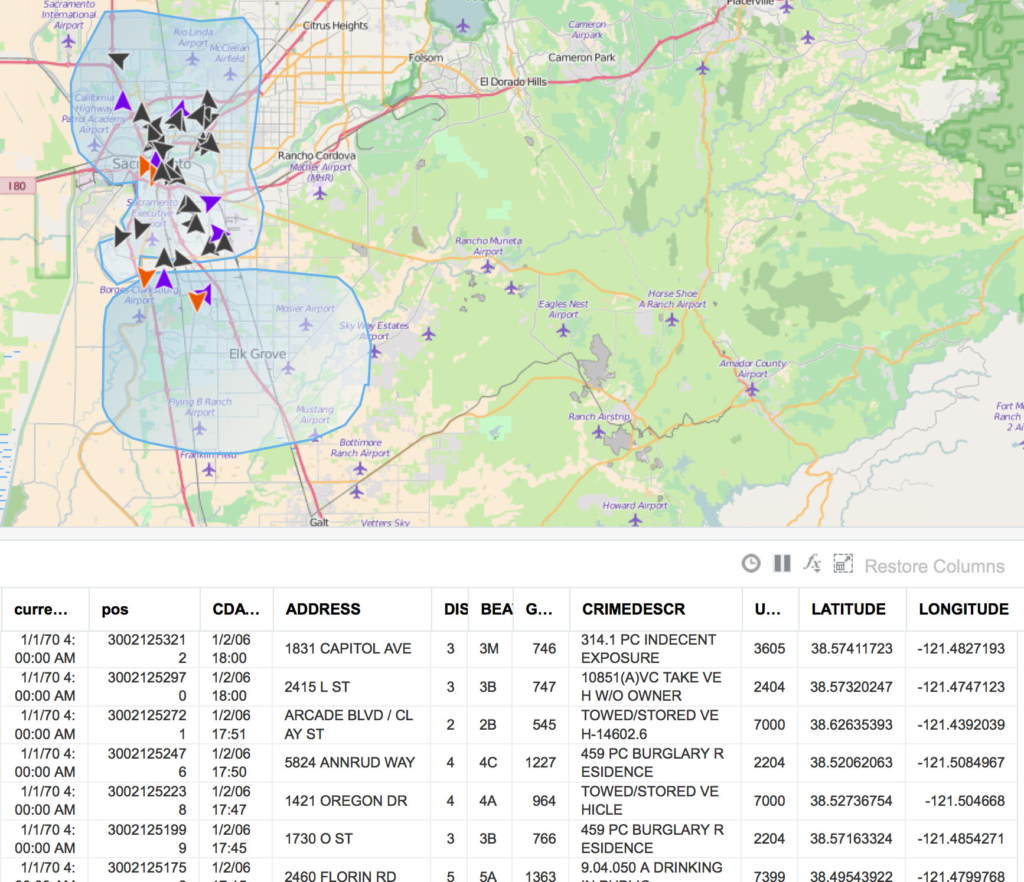

Now let’s jump into the other exploration we created for the crime records:

That’s a LOT of quite precise information flooding into the stream and being plotted on the map in real time. As I write this, the stream is still running, with fast data arriving and being plotted on the spot with sub-second latency. This is a great example for organizations that work in law enforcement, logistics, banking (ATM/payments/online transactions), IoT, etc.

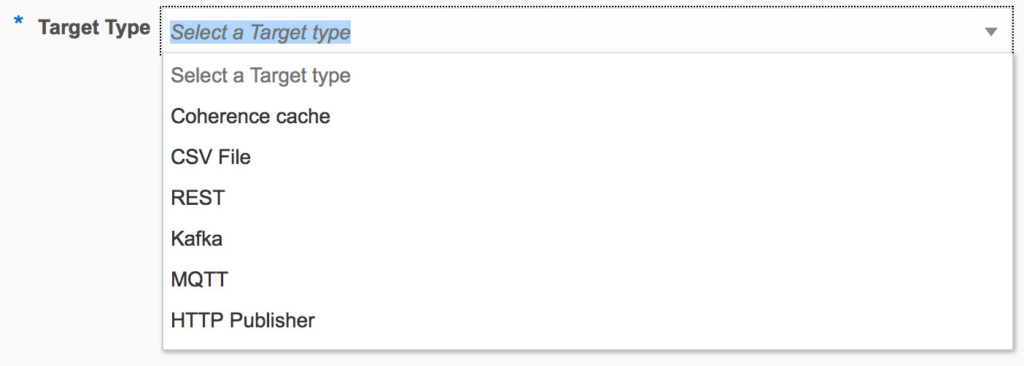

Oracle Stream Analytics allows you not only to consume streams but also to produce them. You may export ANY of your streams in real time to many targets:

I could go further: run more analysis, use more of the out-of-the-box patterns, and customize explorations with business rules, filters and group-by functions. What I’ve shown here is a very small part of what you can do with Oracle Stream Analytics, as my aim was to show you the seamless integration between Oracle GoldenGate and Oracle Stream Analytics. You may refer to the official Oracle Stream Analytics page to learn more about what you can do.

Conclusion

Oracle GoldenGate for Big Data is a reliable and extensible product for real-time, transaction-level data delivery. It integrates very well with other streaming solutions such as Oracle Stream Analytics, which offers very comprehensive stream analytical capabilities to help you explore real-time data and make decisions.

The possibilities for your real-time streaming data are unlimited, and the value that can be derived from it is stupendous when you use Oracle Stream Analytics.

Are you on Twitter? Then it’s time to follow me @iHijazi
