Having been in the field for quite some time, and having met customers and technology people across the globe, I have drawn several conclusions about how they view the “big data” trend. Some see it as “the future”, others think it’s some sort of “rocket science”, and the rest are ready to start their journey with it but are not sure how. A common problem I’ve noticed is a lack of confidence when it comes to making the right choice and committing to it.

Their confusion has several causes: the abundance of non-standardized information on the web, the continuous updates to the technology and its projects (specifically Hadoop), and the offerings made by others claiming the “know-how” and “ever-after” success. And of course, there is the risk of the $$$ involved.

Let me tell you the real deal about big data and what you should consider when deciding to start your big data project.

Do Not Reinvent The Wheel

Regardless of what Big Data is about, and whatever you have read, heard or will learn later, do not recreate something that already exists. Do not start building your own “Big Data” cluster from scratch. Why should you worry about how it works, or how it is optimized and maintained? Isn’t it more profitable to spend your money, time and resources on the insights that “big data” is about to give you?

At Oracle, we’ve got the wheel ready for you to plug and play: Oracle Big Data Appliance, an engineered system that is integrated, optimized, and tuned to shorten deployment time and reduce risk.

Read this white paper to learn more, and have a look at this 3-D demo of Oracle Big Data Appliance.

Work Smarter, Not Harder

Having your Big Data infrastructure ready, whether it comes from Oracle or not, is not the end of your “success” story; it’s just the groundwork. You still need to know how to deliver “raw” data into your Big Data technology (let’s assume it’s Hadoop), how to process that data within Hadoop, and eventually how to offload it so you can make sound decisions through graphs, analysis and statistics.

Hadoop, as a Big Data technology, is diverse, with many projects built on top of it. It’s open source, after all. You will need to keep up with all the updates and enhancements. You will need to “code” and configure. You will need to optimize and keep learning. I’m not against “open-source” projects, but I know the risks involved in using them.

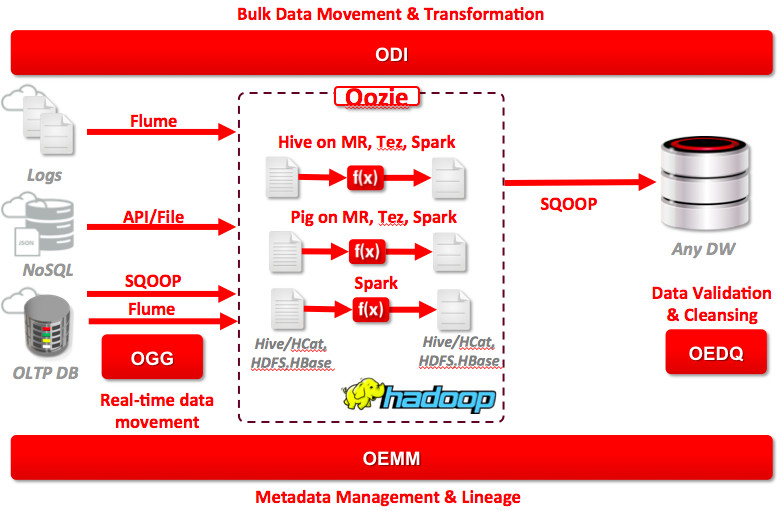

From a “Data Integration” perspective, Oracle has made sure to cover every aspect of data movement, transformation, organization, quality and governance using best-of-breed integrated solutions:

Oracle Data Integrator: For bulk data movement and transformation across heterogeneous environments. This is what you need to move data into Hadoop, transform it there and offload it to an RDBMS.

Oracle GoldenGate: For real-time data movement within a heterogeneous environment, with Veridata for data integrity and consistency validation. GoldenGate will deliver transactions from your OLTP systems to Hadoop in real time.

Oracle Enterprise Data Quality: For data standardization, parsing, cleansing, deduplication and validation. After all, we all want to make sound reports, no?

Oracle Enterprise Metadata Management: For life cycle management, data lineage, impact analysis, business glossary and more.

These solutions are fully big-data enabled. To better understand how they’ll help in your upcoming Big Data project, have a look at the following illustration:

GoldenGate delivers transactions from OLTP to HDFS in real time. ODI helps you move data in bulk into Hadoop, transform it within Hadoop and offload it outside Hadoop. OEDQ helps you cleanse, standardize and validate your data. And OEMM helps you trace data lineage, analyze data impact, manage the data lifecycle and build a business glossary across your entire enterprise infrastructure. Together, they give you full data governance.
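To make the flow above a little more concrete, here is a minimal sketch of the same three stages (cleanse, transform, offload) in plain Python. It is only an illustration and does not use any Oracle or Hadoop API: the sample records, the function names and the `sales_by_country` table are all hypothetical, an in-memory list stands in for data landed in Hadoop, and SQLite stands in for the relational target.

```python
import sqlite3
from collections import defaultdict

# Hypothetical raw records, standing in for transactions landed in Hadoop.
RAW_EVENTS = [
    {"id": 1, "country": " us ", "amount": "10.50"},
    {"id": 2, "country": "US", "amount": "4.50"},
    {"id": 2, "country": "US", "amount": "4.50"},  # duplicate delivery
    {"id": 3, "country": "de", "amount": "oops"},  # fails validation
    {"id": 4, "country": "DE", "amount": "7.00"},
]


def cleanse(events):
    """Data-quality stage: validate, standardize and deduplicate records."""
    seen, clean = set(), []
    for event in events:
        try:
            amount = float(event["amount"])
        except ValueError:
            continue  # drop records whose amount is not a number
        if event["id"] in seen:
            continue  # drop records already seen with the same id
        seen.add(event["id"])
        clean.append({
            "id": event["id"],
            "country": event["country"].strip().upper(),
            "amount": amount,
        })
    return clean


def transform(events):
    """Bulk transformation stage: total amount per country."""
    totals = defaultdict(float)
    for event in events:
        totals[event["country"]] += event["amount"]
    return dict(totals)


def offload(totals, conn):
    """Offload stage: write the aggregate to a relational table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales_by_country "
        "(country TEXT PRIMARY KEY, total REAL)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO sales_by_country VALUES (?, ?)",
        sorted(totals.items()),
    )
    conn.commit()


def run_pipeline(events, conn):
    """Run all three stages and return the rows a report would read."""
    offload(transform(cleanse(events)), conn)
    return conn.execute(
        "SELECT country, total FROM sales_by_country ORDER BY country"
    ).fetchall()


if __name__ == "__main__":
    print(run_pipeline(RAW_EVENTS, sqlite3.connect(":memory:")))
```

In a real project each of these functions would be replaced by the corresponding tool rather than hand-written code, which is exactly the point of not reinventing the wheel.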

The middle-tier “big data” engine could be an Oracle Big Data Appliance or any customized Hadoop cluster. One more thing worth mentioning here: Big Data technologies (say, Hadoop) are not a replacement for relational data warehousing. Hadoop is used for lower-value data that is unstructured, has no relations and, most importantly, is not “urgent” to process or access. It’s not made for real-time queries. It’s not made to replace “transactional” databases. The limitations of Hadoop are a subject of their own, and one you really need to know about.

Listen to The Experts

Who is the expert in the data domain? No, seriously, who has been the database leader for more than three and a half decades? Oracle. If there is anyone who knows “data” best, that would be Oracle, without a doubt. I think I made my point clear… No?

Don’t waste your time and money on the “promises” of others. We do what we do, better: low risk, high scalability and, most importantly, a complete offering!

Time’s Now

If you have heard that “big data” is the future, that’s wrong. Big Data is the present. If you think you still have time to get on the ride later, you’re wrong. The time is now.

Staying on top and being the leader requires you to start working with big data right away. You’re missing a lot; others are already in the fast lane.

Drop me a message if you have any questions or a special use-case that you’d like to discuss. I’d be more than happy to assist you or direct you to the right resources.